Webinar

Who Gets Health Care and Why: AI, Race and Health Equity


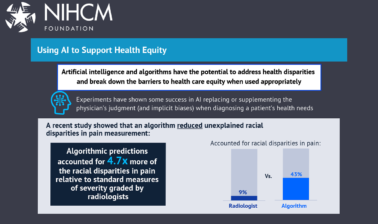

Rapid advances in artificial intelligence (AI) are transforming the way physicians and hospitals view and provide medical care. Yet, the latest evidence suggests the common practice of race-correction in clinical AI often exacerbates longstanding inequities in health outcomes and the type of health care received by Black Americans, Latinos, Asian Americans, and other medically underserved groups.

Algorithms that correct for race are currently used to inform treatment across specialties, including obstetrics, cardiology, nephrology, and oncology, even though race has not been proven to be a reliable indicator of genetic differences.

For this NIHCM webinar, leading researchers in the field explored:

- The scope and likely impact of clinical AI on health disparities and guidelines for considering race in diagnostic algorithms.

- How we can design clinical AI algorithms that can improve health equity and reduce the impact of systemic racism on health.

- The role that health care organizations and health plans can play in creating AI that supports health equity.

0:50

Hi, everyone, thank you so much for joining us this afternoon. I am Sheree Crute, Director of Communications at the National Institute for Health Care Management Foundation (NIHCM). Today, we're going to talk about one of the hottest topics in health care: artificial intelligence tools, in this case algorithms, and how they impact access to treatment and other types of care.

1:17

Using AI data that includes race as a factor, or correcting for race, as it's sometimes called, is a recent practice that has influenced care across many medical specialties, including cardiology, obstetrics, and urology. One of the best-known instances was in nephrology, the treatment of kidney disease.

1:38

In each of these cases, the intent was to use AI to improve the care people received. Yet, in many instances, the unintentional effect has been to increase existing racial disparities and inequities in health care, especially among Black and Latino patient populations.

1:59

A recent ... funded study, conducted by Ziad Obermeyer and published in the journal Science, revealed a powerful example of this type of effect.

2:09

Obermeyer and his colleagues found that an algorithm widely used by American hospitals to determine who needed care was systematically discriminating against Black patients, millions of Black patients.

2:22

The algorithm was less likely to refer Black patients for needed treatment than white patients, even though that study group of Black patients had roughly 26% more chronic conditions.

2:37

The algorithm was not designed to discriminate based on race.

2:41

It was created to assess health care needs based on health care costs and expenditures. The inputs into the system, however, showed lower costs for the Black patients, even though they were sicker than the white population, resulting in them going without needed care for a number of chronic illnesses.

3:02

Addressing problems like these is critical, as AI is clearly the future of health care. The expert speakers we have with us today are going to do exactly that. They're going to help us understand how AI tools like these unintentionally exacerbate the impact of existing racial disparities and racism in the practice of medicine, how companies are changing to address this problem,

3:30

And what solutions we can put in place to provide better care to absolutely everyone.

3:37

This webinar is the fifth in our NIHCM series, Stopping the Other Pandemic: Systemic Racism and Health, in which we explored the links between racism and health inequities in Black, Latino, Native American, and other underserved communities in the United States.

3:54

But before we hear from our speakers, I want to thank our President and CEO, Nancy Chockley, and the NIHCM team who helped us curate and pull together today's event. In addition, if you go to our website, you can get full biographies on all of our speakers, along with today's agenda and their slides.

4:16

And we also invite you to live tweet during our webinar using the hashtag AI and Health Equity, spelled out: AI and Health Equity. We will also take as many questions as time will allow after all of our speakers have finished their presentations.

4:35

I am now pleased to introduce our first speaker, Dr. David Jones. Dr. Jones is the Ackerman Professor of the Culture of Medicine at Harvard University. His research has explored the causes and meanings of health inequalities among Native Americans, in cardiac care, and in many other areas. Notably for today's session, his research has examined reconsidering the overall use of race as a factor in the practice of medicine, especially when allocating resources through algorithms. As part of his recent work, he is now pursuing three new projects: on the evolution of coronary artery surgery, on heart disease and cardiac therapeutics in India, and on the threat of air pollution to health. He teaches the history of medicine, medical ethics, and social medicine at Harvard College and Harvard Medical School.

5:28

Today, he's going to talk to us about the scope and likely clinical impact of race-correcting algorithms. Dr. Jones?

5:42

OK, thank you for the opportunity to speak today about the difficult challenges that race and racism pose for the uses of artificial intelligence in health care.

5:56

Medicine, as many of you know, has a long history of racism stretching back for centuries.

6:02

Efforts to reckon with this history have intensified over the past year, in part because of the protests that followed the murder of George Floyd.

6:09

And in part because of the ways that ... has once again laid bare the scars of racism in America.

6:18

Clinical medicine faces an especially difficult question: should doctors treat ... patients of different races differently because of their race and ethnicity?

6:29

They clearly do.

6:31

Researchers have documented many regrettable cases in which disparities in medical treatment reflect structural, institutional, or interpersonal racism.

6:43

I want to focus today on a different set of cases in which doctors treat people of different races differently, deliberately, because they think it is the medically correct thing to do.

6:54

Race based medicine often has a defensible logic.

6:58

Researchers have documented race differences in disease prevalence and therapeutic outcomes.

7:04

Clinicians in response have factored race into diagnostic tests, risk calculators, and treatment guidelines.

7:12

Many of these race-adjusted tools direct medical attention towards white patients.

7:18

This is perverse at a time when people of color suffer higher mortality rates from many diseases.

7:27

Here's one tool, the STONE score, which predicts the risk of a kidney stone in someone with flank pain.

7:34

The score assesses several factors with a high score indicating higher risk.

7:39

If you look, you will notice that non-Black race is weighted as heavily as having blood in your urine.

7:48

This systematically assigns white people to a higher risk category and directs medical attention and resources, in this case a CAT scan, towards them.
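To make the effect of that weighting concrete, here is a minimal sketch of an additive point score. The factor names, point values, and high-risk cutoff are hypothetical and chosen only to show the structure; they are not the published score. The one property taken from the talk is that non-Black race carries the same weight as blood in the urine.

```python
# Illustrative additive risk score. All point values and the cutoff are
# hypothetical; only the equal weighting of race and hematuria follows the talk.
POINTS = {
    "male_sex": 2,
    "short_pain_duration": 3,
    "non_black_race": 3,  # weighted the same as hematuria
    "vomiting": 2,
    "hematuria": 3,
}
HIGH_RISK_CUTOFF = 10  # hypothetical threshold for the high-risk category


def score(findings):
    """Sum the points for every factor that is present."""
    return sum(POINTS[name] for name, present in findings.items() if present)


def category(total):
    """Map a point total to a risk category."""
    return "high" if total >= HIGH_RISK_CUTOFF else "not high"


# Two patients with identical clinical findings who differ only in recorded race.
clinical = {"male_sex": True, "short_pain_duration": True,
            "vomiting": False, "hematuria": True}
patient_a = {**clinical, "non_black_race": True}
patient_b = {**clinical, "non_black_race": False}

print(score(patient_a), category(score(patient_a)))  # 11 high
print(score(patient_b), category(score(patient_b)))  # 8 not high
```

In this sketch the race term alone moves one of two clinically identical patients across the cutoff, which is the mechanism the speaker describes.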

8:01

Here's another tool, one that predicts whether a pregnant person should attempt a vaginal birth after they've had a prior ... section.

8:10

The researchers studied outcome data from over 7000 women and found several factors associated with a high risk of a bad outcome, including weight, Black race, Hispanic ethnicity, insurance status, marital status, and tobacco use.

8:27

But when they put these findings into their risk calculator, they included weight, race, and ethnicity, but not the other factors, as if somehow they felt race was more important than the others.

8:43

Last summer, I co-authored a paper with several colleagues about these practices of race adjustment, race correction, and race norming. We described 13 tools from different medical specialties.

8:55

Several of these relied on a very thin evidence base.

8:59

Many of the tools rely on a dichotomous variable, Black or non-Black.

9:04

We feared that these tools, if used as directed, would exacerbate health disparities.

9:11

Now, tensions last summer about the issue of race correction were inflamed by allegations that the National Football League used race-specific norms for psychological tests in order to deny concussion settlements to retired Black players.

9:30

These efforts prompted and energized congressional hearings and inquiries.

9:34

The House Ways and Means Committee, for instance, issued a request for information.

9:39

Many medical societies responded, re-examined their use of race, and committed themselves to an anti-racist agenda.

9:45

Several announced plans to stop using race in their clinical algorithms. Two of the most prominent tools, a kidney function test and the vaginal birth risk calculator, have now been reformulated without race.

10:00

But race cannot be ignored.

10:02

Health inequities are ubiquitous in medicine.

10:05

We must study race and racism if we are to eradicate inequity. Medicine must learn how to be race conscious without being racist.

10:15

Suppose researchers develop a tool that has a robust empirical justification for race adjustment, and suppose that that tool could alleviate health inequities.

10:25

Would this be a case of appropriate, race conscious medicine?

10:29

Such tools certainly exist. Should they be used?

10:32

Before answering that question, it is important to consider the possible harms of this kind of race based medicine.

10:40

Now, there is a fundamental and ancient question underlying all of this.

10:46

Are humans basically the same or not?

10:49

Medicine currently operates on the assumption that we are different and that doctors can improve outcomes by focusing on race differences.

10:56

There certainly are many forms of genetic difference between humans. The most dramatic is between people who are XX or XY in their chromosomes, typically women or men.

11:07

There are also subtler genetic differences between people with different ancestry.

11:11

On average, people of the same genetic sex differ at 0.1% of their genetic loci.

11:19

And some of the differences clearly do matter.

11:21

Genetic variants can have powerful effects such as the alleles that cause sickle cell disease or Huntington's disease.

11:28

There are many common diseases whose course and severity are influenced by genes.

11:33

And yes, there are some medically relevant differences between people of different ancestries. Tay-Sachs disease is more common in people of Ashkenazi ancestry.

11:43

Sickle cell trait is more common amongst people with West African ancestry.

11:48

But there is a danger in applying these differences carelessly.

11:52

Even though sickle cell trait is 25 times more prevalent in African Americans than in white Americans, most African Americans don't have it.

12:01

It would be wrong to treat all African Americans differently because of a trait carried by a minority of them.

12:11

How do genetic and racial differences actually matter? Or how much do they actually matter in routine clinical practice?

12:18

This is an empirical question, and I've never actually seen anyone produce a good answer.

12:23

For instance, what percent of clinical encounters are significantly shaped by a genetic variant, especially one with a racial distribution?

12:32

If you look at the most common causes of emergency room visits, chest pain, abdominal pain, back pain, headache, cough, shortness of breath, genetic factors might contribute, but they are not decisive.

12:43

If you look at the leading causes of death, heart disease, cancer, COVID, accidents, genetic factors may again be relevant, but again, they are not decisive.

12:53

Now, I can assert, but cannot prove,

12:56

that doctors could practice medicine just fine, in most cases, if they abandoned the use of race in their diagnostic tools and treatment guidelines. If they do continue to use crude race categories like Black versus non-Black,

13:11

they risk causing several forms of harm. I'll talk about three: miscategorization, reification, and distraction.

13:21

Miscategorization is the most obvious.

13:24

Many of the race-based tools that are in practice rely on a Black versus non-Black distinction, as if humans can be meaningfully divided into these two groups.

13:33

Some clinical tools use five races and two ethnicities. Is any of this scientific or evidence based?

13:41

Someone who identifies as Hispanic might have ancestry that is 100% American, 100% African, 100% European, or any mix thereof.

13:51

Is Barack Obama Black or non-Black?

13:54

That's simply not the kind of question that doctors should be asking.

13:59

In response to this concern, some researchers have begun to assert that race is useful, not as a proxy for genetics, but as a proxy for racism.

14:07

But imagine three patients that a clinician might meet: one descended from enslaved Africans, another a second-generation Ethiopian immigrant,

14:15

and a third a student from a wealthy family from West Africa.

14:18

These people might have little in common in terms of their lived experiences of racism, and little shared ancestry as well.

14:25

But our health care system would label them as one type, Black, distinct from all other humans.

14:31

This simply makes no sense.

14:34

Reification is subtler.

14:37

For centuries, scholars have debated whether race is a legitimate category,

14:40

a natural kind. Substantial evidence now shows that human variation exists on gradual and continuous geographic gradients, without demarcation between traditional race categories.

14:51

It might be possible to develop sophisticated ways of classifying this variation.

14:56

But instead of doing that, doctors continue to rely on traditional, that is to say, 18th-century, race taxonomies.

15:03

This reinforces the popular belief that the old race categories are real, legitimate and useful.

15:09

This exacerbates the divisiveness of race in America.

15:14

The third harm is distraction. Medicine's reflexive use of race diverts attention from other factors that are relevant, possibly more relevant than genetics and race.

15:24

Race is certainly a powerful force in American society, but so is class.

15:29

Researchers have documented innumerable social determinants of health: income, zip code, marital status, environmental exposures, adherence rates, and countless others.

15:38

Many of these have larger effect sizes than common genetic variants.

15:43

Should we adjust diagnostic tests based on zip code?

15:46

Should we initiate mammography earlier in poor women?

15:50

I don't think anyone has asked or answered these questions like they have about race.

15:56

Now, I certainly recognize that it is easier to criticize practices than to implement solutions, so I feel obligated before closing to make some specific recommendations.

16:05

As I said earlier, I am not calling for colorblind medicine.

16:10

As long as race and racism determine access to wealth, health, and social resources, we need to study them.

16:17

I support race conscious medicine.

16:19

We just need to figure out the best way for medicine to be race conscious.

16:23

Medicine needs to begin by transforming its data and descriptive statistics.

16:28

If we think human differences are important and should inform medical practice, then we need to invest the resources required to map and understand those differences.

16:38

There are genetic alleles that influence disease risk and treatment outcomes. Some occur at different frequencies in people of different ancestries.

16:46

But if we want to use that knowledge well, we cannot rely on the Black versus non-Black distinction.

16:52

Instead, we need to develop better ways to measure and assess genetic variation.

16:57

Meanwhile, whenever we see a need to specify genetics or ancestry, when we think difference is important, we should also collect and report high resolution data about the social determinants of health.

17:08

They are likely to be more important than the genetic information.

17:12

And it's not just current socioeconomic status that matters, we need to figure out a way to measure the integrated sum of social and economic exposures over a person's lifetime.

17:24

Well, why does this matter for medical artificial intelligence?

17:28

The traditional tools that I have described are developed by researchers who chose which data to collect, who performed linear regressions to determine the variables that correlated most closely with outcomes, and then they built those factors into their tools.

17:42

Machine learning algorithms do something fundamentally similar.

17:46

Since an algorithm, and not a human user, is determining what variables are incorporated, this reduces some forms of interpersonal bias, some forms of racism, but it does not solve the problem.

17:59

As long as American health datasets excessively incorporate data about race and ethnicity while neglecting the socioeconomic status and other relevant exposures, then the AI algorithms will recapitulate social biases and conclude that race is important in medicine.
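The point about datasets that record race but neglect socioeconomic status can be illustrated with a small stratified comparison. This is a sketch on synthetic counts invented for illustration; the group labels, income strata, and numbers are assumptions, not data from any study mentioned in the talk.

```python
# Synthetic counts, invented for illustration:
# (group, income) -> (patients, bad outcomes).
# Within each income stratum both groups have the same outcome rate.
counts = {
    ("A", "low"): (200, 40),    # 20% in the low-income stratum
    ("A", "high"): (800, 40),   # 5% in the high-income stratum
    ("B", "low"): (800, 160),   # 20%
    ("B", "high"): (200, 10),   # 5%
}


def rate(rows):
    """Pooled outcome rate over a list of (patients, events) pairs."""
    patients = sum(n for n, _ in rows)
    events = sum(e for _, e in rows)
    return events / patients


def rate_by_group(group):
    """Outcome rate when only the group label is recorded."""
    return rate([v for (g, _), v in counts.items() if g == group])


def rate_by_group_and_income(group, income):
    """Outcome rate when income is recorded as well."""
    return rate([counts[(group, income)]])


# With the group label alone, group B looks far riskier than group A.
print(rate_by_group("A"), rate_by_group("B"))  # 0.08 0.17

# Once income is recorded, the apparent group difference disappears.
for income in ("low", "high"):
    print(income,
          rate_by_group_and_income("A", income),
          rate_by_group_and_income("B", income))
```

A model trained only on the first view would learn that group membership predicts the outcome, even though in this synthetic data the outcome is driven entirely by income.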

18:18

Studies have already confirmed this.

18:20

The Obermeyer study, mentioned in the introduction, describes an AI tool that predicted the risk of death in sick patients.

18:26

Since the tool considered health care spending, and since health care spending in the United States reflects forms of racism, the tool inadvertently built racism into its output.
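The mechanism can be sketched with a toy ranking. The patients, costs, and condition counts below are synthetic and invented for illustration, and the selection rule is an assumed simplification, not the tool from the study. Group B is given lower past spending at the same level of illness, mirroring the pattern described in the talk.

```python
# Synthetic patients, invented for illustration. "cost" stands in for past
# spending; group B has lower spending at the same level of illness.
patients = [
    {"id": 1, "group": "A", "chronic_conditions": 2, "cost": 5000},
    {"id": 2, "group": "A", "chronic_conditions": 3, "cost": 7000},
    {"id": 3, "group": "A", "chronic_conditions": 4, "cost": 9000},
    {"id": 4, "group": "B", "chronic_conditions": 3, "cost": 4000},
    {"id": 5, "group": "B", "chronic_conditions": 4, "cost": 6000},
    {"id": 6, "group": "B", "chronic_conditions": 5, "cost": 8000},
]


def select_for_program(rows, key, slots):
    """Pick the `slots` patients ranked highest on the chosen label."""
    ranked = sorted(rows, key=lambda r: r[key], reverse=True)
    return [r["id"] for r in ranked[:slots]]


# Ranking on spending versus ranking on a direct measure of illness.
by_cost = select_for_program(patients, "cost", slots=3)
by_need = select_for_program(patients, "chronic_conditions", slots=3)

print(by_cost)  # [3, 6, 2]
print(by_need)  # [6, 3, 5]
```

Ranking on cost selects two patients from group A and one from group B; ranking on chronic conditions reverses that, without race ever appearing as an input.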

18:38

The best way to prevent this is to pay close attention to the datasets that fuel machine learning algorithms.

18:45

We would ideally use datasets that either contain no markers of human difference, to prevent the instantiation of racism, or use datasets that contain extensive measures of both genetic differences and socioeconomic status, so that we end up with a complete picture of what really matters.

19:05

Yes, this will be hard to do.

19:07

But human science has accomplished many hard things before.

19:11

We sent 12 men to the moon and brought them back safely.

19:14

We developed powerful COVID vaccines in just one year.

19:17

Surely, we can figure out how to move beyond crude categories of racial categorization.

19:25

We need to commit to collecting more comprehensive data about patients in both research and clinical practice, and we need to develop clever ways to analyze this data.

19:34

Our patients deserve better.

19:37

Thank you.

19:39

Thank you, Dr. Jones, for that wonderful presentation.

19:44

Our next speaker, who is going to continue the discussion of how race influences medicine, is Dr. Fay Cobb Payton. Dr. Payton is a professor of information technology and analytics at North Carolina State University, and she was named a University Faculty Scholar for her leadership in turning research into solutions to society's most pressing issues. Dr. Payton works on projects related to smart health and biomedical research in the area of artificial intelligence and advanced data science. She has spoken extensively on the risk of digital discrimination, exploring AI bias, artificial intelligence in health care, and race, artificial intelligence, and systemic inequities. Today, she is going to discuss the intended or unintended consequences of including race in clinical algorithms, and how we can use AI to improve, not harm, health for people from all racial backgrounds. Dr. Payton?

20:50

Thank you. Thanks for inviting me today.

20:56

So, I'll put this disclaimer out. It's great to be here.

20:59

And we will go ahead and get started.

21:02

So I will start with some research findings from a group of colleagues and me, who have been studying health care, and particularly using large datasets to discover any insights on particular health care conditions.

21:19

And I start with two very enthusiastic people, whom I'm sure many of you know. And this was our experience in the lab, thinking, "We got data, we got data."

21:34

And by that, we were excited.

21:36

We were ready to go out, do some analysis, and draw some conclusions, and come up with some recommendations.

21:44

So we have the data, as Picard would say, and everyone has data, as Oprah said.

21:51

So, Study one.

21:53

We took a look at the idea of diabetes in hospitalized patients, and our goal was actually to take a look at sex and race.

22:03

And we were particularly interested in comorbid conditions: the degree to which diabetes exists, but other health disparities, as defined by the CDC, are prevalent in a patient dataset.

22:19

Our team, as we're talking about the data, started out with, as you can see, almost 48 million records, discharge records, over a huge latitude of time.

22:35

And we were pretty adamant that we were going to be able to track patient A or patient B all the way through this time period, from 2006 to 2011, as they had experience in the intake process.

22:52

And if they did have that experience, hospital experience, we whittled down, and this is sort of a schematic, to say, no, we're not really going to look at anyone that's admitted for birth or newborn delivery, or anyone that is less than 18.

23:12

And as you can see, we had a number of discharges with missing values that were excluded.

23:21

And I think there is something to be said about when we grab on and hold onto these datasets.

23:29

The data, as you well know, comes with many different attributes.

23:35

We were looking at age, gender, race, total cost, length of stay, and discharge disposition.
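The cohort-building steps described above (dropping newborn admissions, patients under 18, and discharges with missing values) can be sketched as a simple filter that also counts why each record was dropped. This is an assumed illustration, not the study's code: the records are invented, the field names mirror the attributes just listed, and `admission_type` is a hypothetical extra field.

```python
from collections import Counter

# Hypothetical discharge records. Field names follow the attributes named in
# the talk; "admission_type" is an assumed extra field used for the exclusion.
records = [
    {"age": 54, "gender": "F", "race": "Black", "total_cost": 12000.0,
     "length_of_stay": 4, "disposition": "routine", "admission_type": "emergency"},
    {"age": 0, "gender": "M", "race": "White", "total_cost": 3000.0,
     "length_of_stay": 2, "disposition": "routine", "admission_type": "newborn"},
    {"age": 16, "gender": "F", "race": "Hispanic", "total_cost": 8000.0,
     "length_of_stay": 3, "disposition": "routine", "admission_type": "emergency"},
    {"age": 67, "gender": "M", "race": None, "total_cost": 20000.0,
     "length_of_stay": 9, "disposition": "transfer", "admission_type": "elective"},
]

REQUIRED = ("age", "gender", "race", "total_cost", "length_of_stay", "disposition")


def exclusion_reason(rec):
    """Return why a record is excluded, or None if it stays in the cohort."""
    if rec.get("admission_type") == "newborn":
        return "newborn admission"
    if any(rec.get(field) is None for field in REQUIRED):
        return "missing values"
    if rec["age"] < 18:
        return "under 18"
    return None


cohort = [r for r in records if exclusion_reason(r) is None]
excluded = Counter(exclusion_reason(r) for r in records if exclusion_reason(r))

print(len(cohort))     # 1
print(dict(excluded))  # one record excluded for each of the three reasons
```

Keeping the exclusion counts, rather than silently dropping rows, makes it possible to check afterwards whether missing values fall more heavily on some groups than others.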

23:45

What we found, and what we learned, was this: we came up with this schematic to sort of take a look at who has diabetes, who doesn't have diabetes, and what are the comorbid conditions that cling to, and with, diabetes. Is it obesity, is it heart disease, is it mental health?

24:08

We wanted to discern how this affected and impacted those patients, based on their sex, based on race and ethnicity.

24:18

What are the total charges? Length of stay and again, as we mentioned, the disposition.

24:24

This representation shows the relationship between variables and outcomes of interest.

24:31

The population of interest was separated by these variables to represent sort of the inner circle.

24:38

The primary variable of interest, comorbidity, is presented in light gray; the variables in the following dark gray represent demographic variables; while those in the black section represent the primary outcomes of interest, which are in this outer array.

25:01

What did we learn?

25:03

We learned from our initial results, which took quite a bit of time, and hence, why this is important when we are designing algorithms.

25:15

Initially, we started out, as you could see, with that very large dataset of over 47 million, or right at 47 million.

25:23

We were not able to use all of the data.

25:27

We needed to take a step back.

25:29

We needed to do some data scrubbing. We needed to ask questions around the comorbidities and the coding of ICD codes that were in the data.

25:40

We learned that, for a principal reason for hospitalization, disparities in outcomes for women and ethnic groups were persistent.

25:51

We learned that ethnic groups have poor outcomes and are less likely to have routine discharges from the hospital.

25:59

We learned that these disparities have persisted over the time horizon for the datasets that we had, suggesting that without conscious effort to personalize the care for women and diverse groups with diabetes, their outcomes are not likely to improve.

26:20

We also determined and concluded there could perhaps be a differential impact of diabetes, definitely between men and between women.

26:32

Homogeneity

26:33

in patients and comorbidities was rare, or is rare, given the variability of demographic characteristics. In other words, oftentimes, as algorithms or models are set up, they are looking at a specific disease condition.

26:51

We wanted to know how did this impact length of stay and cost of care when other comorbid conditions can be present?

27:04

We then took a look at a second set.

27:07

Based on the diabetes study, we were interested in the same sort of topology, there looking at the impact of mental disorders on HIV.

27:19

By looking at these disorders, we came up with ICD-9 codes at the time and really learned, and this is something that we also learned from the diabetes study.

27:31

When we're talking about data, when we're talking about how attributes are defined, when we're talking about fluctuation and changes in ICD codes, particularly in health care, then a longitudinal study, with implications for the long run, can be very difficult. We did run into these problems, particularly when we were talking about mental health.

27:58

What we learned, and you can see here in the bold to my left, your right: we found out that there was the existence of nondependent abuse of drugs, drug dependence, depressive disorder, mood disorders, all the way down to schizophrenia, that really crept up and weighed more in this model.

28:23

The results of our principal components analysis showed some of these disorders as primary conditions that actually had more weight in the model.

28:40

What did we learn in this case?

28:42

We learned, in this case, that longer length of stay and comorbid conditions do affect HIV patient outcomes.

28:53

Mental health disorders generally result in a decrease in both length of stay and total charges.

29:01

Patients with mental illnesses are more likely to be transferred to other facilities so that their true length of stay actually is not observed.

29:13

We found that there was actually some disparity in this when we looked at race and ethnicity.

29:20

The most important conditions that bubbled up for this model

29:25

were mental health disorders, and we placed the codes out here:

29:30

mental disorders, mood disorders, depression, and anxiety.

29:34

The key takeaway that we learned is that health services and health services delivery approaches to adherence and treatment, to better address chronic diseases and their severity, along with the comorbidities, are truly needed when it comes to race and race-based medicine.

29:59

We turn to a more salient issue.

30:02

With another study, looking at, again, a large dataset. This time, using natural language processing.

30:09

And really looking at what other studies have done to inform us about how we deliver health care, particularly health care pre-COVID. I should note that this study is about college students.

30:24

What we learned is that the majority of the research was focused on, not on age, not on race, actually, but more so, what are the services that were needed?

30:39

Many a study was looking at: what are the services that institutions can provide?

30:45

And how they can provide them to address, in fact, issues of age and race and crime, and the aftermath of that impact of having a challenging experience on an institution's campus, as well as what are the issues around victimization that lead to mental health concerns in the first place when it comes to our college students.

31:16

When race is included, or when race is excluded?

31:19

What we know, and this was mentioned earlier by Dr. Jones, but I want to highlight some of this: race-blind algorithms may be well intended.

31:32

Race blindness oftentimes can heighten disparate conditions and experiences, as we found with the study of mental health and the study of diabetes.

31:43

Race blindness actually hides structural inequities that often go unacknowledged in an algorithm. Take a race-blind algorithm where health care costs serve as a proxy.

31:56

As we know from Obermeyer and his group in the Science publication, the algorithm falsely concludes that Black patients are healthier than equally sick white patients. Now there are fewer resources being spent on Black patients who have the same level of need.

32:16

Why does all of that matter, and so what is missing in the discussion?

32:23

Healthy People 2020 points out the social determinants of health.

32:28

It's based on five place-based domains, and I think these are the domains that are critical, even if my team and I were to go back and look at our results from earlier years.

32:43

There is the economic stability of patient populations, variability, and education.

32:50

****, first is health care, Neighborhood and built environment.

32:57

I think, earlier, Dr. Jones mentioned zip code. Then there is social and community context, very much in terms of discrimination being a key issue in the social and community context domain.

33:15

While all of that is good, I do say, and I've often used this as a talking point, and try to include this in the research.

33:25

Then big data.

33:26

This was a note from The Washington Post.

33:29

"Big data was supposed to fix education." Likewise, there was a lot of hope placed on big data in health care.

33:37

It didn't fix education, it won't fix health care, but it's also time to have models that are sensitive to small data, small data being context, which is really important.

33:52

What I mean by that is, here's a topic that we took a look at about how do we engage?

33:59

And how do we engage both from an algorithmic perspective, but also from a cultural perspective?

34:08

While time won't permit me to discuss all of this, the My Health Care Impact Network dot org started out as a platform to have discussions with young people around health disparities.

34:25

The info graphics are out there to show what are the other issues salient up.

34:31

A binary on versus off grid versus black bear, black versus white, sort of construct.

34:38

What are the other issues that actually come into play when it comes to understanding health care and health care outcomes?

34:48

Historical context is critical.

34:52

Think about the time period of the story for which you are running that algorithm, telling that story. What events are critical?

35:00

We all know that we've had the trifecta of COVID, an economic downturn, and sort of racial strife here in the country.

35:08

What could the author, what could you, do in terms of using historical context to impact your plot, your analyses, your implications, your recommendations?

35:20

How does this inform the reader?

35:23

Historical context matters. It is not just a data issue.

35:28

I highlight these types of biases that come into play when it comes to health care. It is not just dataset bias. There's association bias.

35:40

There's automation bias, confirmation bias, and interaction bias.

35:46

And, lastly, I will put up this.

35:49

This was actually released, as you can see, a few days ago. While there is a lot of talk about health disparities and what algorithms could do, health equity, health disparities, and the role of representation in this space do matter.

36:11

Particularly when it comes to data science and decolonizing data and health disparities, to tell the picture of who is designing the algorithms, what is the context, and are there populations that are missing from the team.

36:34

And this is my last slide.

36:36

The lived experience and algorithmic bias.

36:39

There are models of fairness that exist out there, But I'd say it's small data, social determinants of health, place, and space.

36:48

There must be ecosystem thinking.

36:51

Health care does not exist in a vacuum. Data sanitation, which we definitely learned in the studies that I've talked about.

37:00

The training data, the validation data, and the test data all have implications for overfitting and underfitting, which has impacts on populations.
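
As a rough illustration of the point about training, validation, and test data, the sketch below (not from the webinar) splits a made-up patient list so that every group appears in all three parts, then applies a crude rule of thumb to per-group error rates. The record fields, the 60/20/20 split, the error numbers, and the thresholds are all assumptions chosen for illustration only.

```python
import random
from collections import defaultdict


def stratified_split(records, group_key, percents=(60, 20, 20), seed=0):
    """Split records into train/validation/test so each group lands in every part."""
    rng = random.Random(seed)
    by_group = defaultdict(list)
    for rec in records:
        by_group[rec[group_key]].append(rec)
    train, val, test = [], [], []
    for members in by_group.values():
        rng.shuffle(members)
        n_train = len(members) * percents[0] // 100
        n_val = len(members) * percents[1] // 100
        train += members[:n_train]
        val += members[n_train:n_train + n_val]
        test += members[n_train + n_val:]
    return train, val, test


def diagnose_fit(train_error, val_error, max_error=0.20, max_gap=0.10):
    """Crude heuristic (assumed thresholds): high training error suggests
    underfitting; a large train-to-validation gap suggests overfitting."""
    if train_error > max_error:
        return "underfitting"
    if val_error - train_error > max_gap:
        return "overfitting"
    return "ok"


# Hypothetical data: 100 patients, 80 in group A and 20 in group B.
records = [{"id": i, "group": "A" if i < 80 else "B"} for i in range(100)]
train, val, test = stratified_split(records, "group")

# Made-up (training error, validation error) pairs per group.
errors = {"A": (0.05, 0.08), "B": (0.04, 0.30)}
report = {group: diagnose_fit(tr, va) for group, (tr, va) in errors.items()}
print(len(train), len(val), len(test))
print(report)
```

In this toy setup the model looks fine for the larger group while the smaller group shows a wide gap between training and validation error, which is the kind of population-level impact an aggregate error rate can hide.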

37:11

And, again, context matters.

37:13

Augmentation of AI findings relative to how the results will be interpreted and used, and informing policymakers, all centered around the concept of design justice: centering race in design, so that it will not be absent and it will not be overlooked.

37:35

And with that, I will turn it over to you, and thank you for having me.

37:39

Thank you so much, Dr. Payton, for such a powerful and insightful look at these really, genuinely complex issues. Our next speaker, Mr. Rajeev ..., is the Chief Digital Officer at ..., Inc. Mr. ...'s experience spans over 20 years of innovation-driven industry and social change across health care and technology. Under the leadership of Anthem CEO Gail Boudreaux, Anthem embarked on what has been called a digital transformation just three years ago.

38:16

Mr. ... is leading that work to help Anthem become a digital platform for health that utilizes a multichannel approach to connect consumers and providers. To achieve this transformation, Mr. ... drives the vision, strategy, and execution of Anthem's digital-first approach across the organization's digital, artificial intelligence, exponential technology, service experience, and innovation portfolios. He is reimagining the future of health care by enabling Anthem to harness the power of AI and data. Today he is going to talk about how health care organizations and plans can contribute to AI that advances health equity. Mr. ...?

39:04

Thank you, Sheree. Pleasure to be here this afternoon with all of you.

39:07

And I was glad that Dr. Jones and Dr. Payton preceded my talk here, because it sets a great context for how Anthem is looking at this very

39:21

deep

39:22

and, I would say, critical issue at a population level. And the reason I say that is Anthem is the second-largest insurance company in the US. We cover 45 million lives.

39:34

And as a result of our longitudinal relationship with the populations that we serve, we've got access to claims data for over 70 million lives.

39:46

We've got access to clinical data for over 15 million lives, and we've got access to lab data for over 18 million lives.

39:54

And we've got a wealth of socially oriented data on where people live, work, and play, and on the drivers of health-related issues, if you will, or the social drivers of health.

40:11

And through all of that, and by no means is this number here that I've put up on the slide necessarily peer reviewed and critically validated.

40:21

But in all of the analysis that we've done, we've found that roughly 80% of an individual's health outcome is determined by where they live, as opposed to, let's say, their DNA or any other health factor.

40:39

So, clearly, all the things that we just talked about are critically important to driving health equity, and to using all of the techniques and all of the interventions at our disposal in order to address it holistically.

40:55

But, before we dive into that, let's maybe set some context, right?

40:59

So, as I said, a lot of health-related issues are driven by where we live, work, and play.

41:09

For instance, Dr. Payton talked about economic disparities and other social factors. But breaking that down a level, what that means is: where you live, does it have access to affordable and nutritious food?

41:25

Are there adequate recreation and exercise options? Is there access to good medical care?

41:32

What about pollution levels in the air and the water? Is there reliable transportation?

41:39

All these things have a huge impact on health, as does where we work, the place that you work.

41:50

Does it provide health insurance coverage? Does it provide a focus on health issues,

41:58

connecting to primary-care-type needs or behavioral health issues, as well as looking at all the things that create stress and other things in life,

42:09

leading to health-related outcomes and health-related issues? And ultimately, the social factors of health: do we have friends and family, or are we lonely? Do we have a good support system?

42:21

And what kind of behaviors is that driving? Healthy behaviors, or is it driving unhealthy behaviors like eating unhealthy foods, drinking, and smoking? All of these things have a role to play in our health outcomes.

42:40

So, the role of artificial intelligence there, right?

42:42

So, given the wealth of data, and given the fact that data is growing at an exponential pace, our own research shows that Anthem's dataset roughly doubles every 73 to 80 days.

42:58

So, it's impossible for humans to keep pace with the exponential growth of this data.
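
A quick back-of-the-envelope check of what that doubling rate would imply, assuming steady exponential growth over a 365-day year (an assumption made here for illustration, not a figure given in the talk):

```python
def growth_factor(doubling_days, horizon_days=365):
    """How many times larger a dataset gets over the horizon,
    if it doubles every `doubling_days` days."""
    return 2 ** (horizon_days / doubling_days)


fast = growth_factor(73)  # doubling every 73 days: 365 / 73 = 5 doublings
slow = growth_factor(80)  # doubling every 80 days: about 4.56 doublings
print(f"about {slow:.1f}x to {fast:.0f}x per year")
```

Under that assumption, the dataset would grow by a factor of somewhere between roughly 24 and 32 in a single year, which gives a sense of the scale behind the remark about humans keeping pace.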

43:05

So, enter artificial intelligence. It's a long, well-established field. It's been around for 40-plus years.

43:12

And it grows more and more mature by the day, by the week, by the year.

43:17

But there's a lot more to be done in terms of the responsible and ethical use of artificial intelligence in order to address the very many things that we're talking about.

43:29

So, how do we actually apply AI, and how do we correlate it back to the issues that I just referenced around driving more health equity, improving access to care, and using all of this data ultimately to drive better health outcomes?

43:45

So here are just a few examples of how Anthem is making that happen.

43:50

So, first off, we've got something that we call our data platform for social determinants of health, and you can click on that URL to get a sense for what that looks like for you in a public setting.

44:04

But the private version of that combines where we live, work and play, and all of the associated data on that.

44:10

With our clinical data, with our claims data, and our labs data, and, increasingly, data from our devices to create a number of predictions.

44:22

And today, we're able to predict roughly 4,000 unique things about a person's future health care journey: everything from the likelihood of developing an opioid substance abuse disorder, or developing a chronic condition like hypertension or diabetes in the future,

44:41

to the risk of falling, the risk of skipping an appointment, the risk of missing a critical gap in care and, ultimately, suffering the worst consequences of all as a result.

44:54

So, what do we do with all this wealth of insights?

44:57

Collectively, we have over two billion such insights and there's two ways in which we're activating and making use of these predictions.

45:06

One is through our partnership with our provider network, which comprises primary care physicians, caregivers, physician extenders and nurse practitioners, and of course hospital systems, home health providers, and the entire gamut of the health care delivery infrastructure.

45:28

And through our relationship with the broader care delivery infrastructure, we're integrating our insights into the electronic medical records and other points of data and workflow in a physician's office or hospital system to get them to see the whole picture of health. And those are oftentimes clinical insights.

45:51

But more and more, they're about social insights that relate to fairness, equity, and conclusions that physicians might draw purely based on race, ethnicity, and gender.

46:06

And some of the basic things that are used today, but looking beyond and saying: does this patient that I'm seeing have access to transportation to come see me for the next appointment?

46:16

Or does this person have enough time in their day to spend their time exercising and sleeping well,

46:23

and looking after themselves in a way that would address their medical, behavioral, or social well-being?

46:32

You put all of that into the hands of the clinician so that not only can they treat what's presented, but also look beyond, look at the whole picture of health, and take care of that in their workflows.

46:47

So while presenting this information itself is useful, what we've also come to realize is that, to truly make this actionable and have the providers be a part of this closed-loop sort of mechanism for taking care of these interventions, we need to actually incent them to do just that.

47:09

So to do that, we've created and expanded our value based care programs where we align the incentives and the outcomes for each provider based on the number of actions that they're taking on the insights that are being created by our algorithms.

47:25

And we want feedback from them on both what's working and what isn't.

47:29

So if they're taking action and they're seeing an improvement, we want that feedback to come back to us so that we can continue to optimize our algorithms.

47:38

As well, if they feel that the insight that we're surfacing is not relevant and is not appropriate for that particular patient, we also want to understand why that's the case, and then use that to continually optimize and refine our algorithms.

47:53

So think of that as one bookend of a platform that needs to be in place.

47:59

The other is the direct activation with our members, our consumers, which happens through our digital apps and assets, as well as our clinical and customer service teams, which interact with our consumers.

48:12

And, as you would imagine, as a large insurance company, we get hundreds of millions of interactions.

48:21

Some of them happen digitally: about 60 to 70% of our interactions with our members happen through digital channels, and about 30% of them happen through our contact centers.

48:32

So regardless of what channel our members are reaching out to us on, or we're reaching out to them through,

48:38

the intent is that with every interaction, we want to understand the context in which we are resolving an issue.

48:45

So, if a customer is calling us about, let's say, an administrative issue about the status of a claim, or a particular benefit, or a co-pay, or what have you, we always want to correlate that to: what is the related set of dots that we should be connecting today?

49:03

Are they missing an appointment, or are there programs that we should be enrolling them into and making them aware of, so that we're raising the education level of the collective membership that we're caring for and serving, as well as providing recommendations to our provider network on the specific actions that they ought to be taking?

49:26

And in so doing we want to create this virtuous cycle where providers are incented to do the right thing based on our insights.

49:33

Our members are also incented, for example through eliminating co-pays, providing rewards and loyalty programs, and doubling points in some cases, to take certain actions based on the whole set of insights that our algorithms are surfacing.

49:52

But in so doing, there's a critical dependency on making sure that we're accounting for things like bias.

49:59

We're accounting for data quality issues in our underlying datasets to make sure that the insights that we're surfacing are actually representative of the complete population, and that we're not missing anything because we're simply using machine learning techniques to learn from what's perhaps an embedded bias in the system already.

50:18

So, to that end, we've created something that we call the Office of Responsible Use of AI and Ethics.

50:26

And this group continuously looks at data, tests for bias, and uses several other techniques to make sure that these things are used in a responsible and scalable manner.
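
The talk does not say which bias tests are used. As one hedged illustration of what "testing for bias" can look like, the sketch below compares, across two hypothetical groups, how often a model flags people for outreach and how often it misses people who actually needed care. The group labels, row format, and counts are invented for the example and do not describe Anthem's methods.

```python
from collections import defaultdict


def rates_by_group(rows):
    """rows: iterable of (group, predicted_flag, actual_need), flags being 0 or 1."""
    counts = defaultdict(lambda: {"n": 0, "flagged": 0, "needed": 0, "missed": 0})
    for group, predicted, actual in rows:
        c = counts[group]
        c["n"] += 1
        c["flagged"] += predicted
        c["needed"] += actual
        if actual == 1 and predicted == 0:
            c["missed"] += 1  # someone who needed care but was not flagged
    return {
        group: {
            "selection_rate": c["flagged"] / c["n"],
            "false_negative_rate": c["missed"] / c["needed"] if c["needed"] else 0.0,
        }
        for group, c in counts.items()
    }


def disparity(rates, metric):
    """Largest difference in a metric between any two groups."""
    values = [r[metric] for r in rates.values()]
    return max(values) - min(values)


# Made-up predictions: both groups have 10 of 20 people with real need.
rows = (
    [("A", 1, 1)] * 8 + [("A", 0, 1)] * 2 + [("A", 0, 0)] * 10
    + [("B", 1, 1)] * 4 + [("B", 0, 1)] * 6 + [("B", 0, 0)] * 10
)
rates = rates_by_group(rows)
fnr_gap = disparity(rates, "false_negative_rate")
print(rates)
print(f"false-negative-rate gap: {fnr_gap:.2f}")
```

Here the two groups have the same underlying need, yet the model misses far more people in group B. A gap like that is one tangible signal a review team could act on before an insight is rolled out at scale.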

50:39

So, in a future that is so technology-centric and is going to be leveraging data and AI in such a scaled manner, our view is that we've got to use technology and AI in a way that elevates the human experience.

50:57

So to that end, our strategy is to really use these technologies to automate as much of the administrative process in our system as we can, freeing up enough time across the system.

51:09

Whether it's the physician's time, freeing them up from having to rely on their electronic medical records more than necessary,

51:16

or any of the 85,000 people who work for Anthem, to focus almost exclusively on the things that need to be considered for overall health,

51:27

and spend their time there, versus resolution of a claim status, a billing issue, or other things that need to be decided on.

51:36

All of those things could be automated and decided on by algorithms, and then the rest is where we want to focus our time.

51:44

And, in so doing, elevate the human-to-human interaction and the human connection.

51:55

So, with that, I will turn it back over to you, Sheree. Thank you for your time; I appreciate being on this panel today.

52:03

Thank you very much, Mr. .... I really appreciate your presentation. Dr. Jones and Mr. ... are going to be able to stay with us. Dr. Payton, if you have a moment (Dr. Payton has to leave us exactly at two o'clock), one of the questions that was directed to you, and that was asked by several people, is: what is the most effective way to include data about communities at the community, grassroots, and diverse level in AI systems?

52:38

Yeah, I get asked that question all the time. I would say this: there are a number of strategies that you can use, and one is a very ground-level, grassroots approach. You need to involve community in the process.

52:57

You know, the Urban Institute has laid out,

53:01

I think, some very systematic steps from the research that they have done to outline how you get community involved. So I think community involvement is one.

53:15

I think, secondly, it requires gaining trust, not assuming that trust, and recognizing that trust building, sharing, is a process.

53:26

Right. And I think that is clearly important.

53:29

I think having more inclusive, diverse people, designing, implementing, interpreting, creating policy is very important.

53:46

Because, you know, one of the things that doctor ...

53:50

mentioned was knowing one's zip code and understanding the zip code.

53:57

What does that mean?

53:58

I'll give you just one little simple data point, then I'll stop.

54:02

Um.

54:03

I would hate for someone to have made decisions over the course of my lifetime based on the zip code that I grew up in.

54:11

Most likely, and my friends and I always talk about it,

54:15

We wouldn't be here.

54:17

We wouldn't be on the platform. We'd still be subjects of studies.

54:23

And so, I think, understanding context and inviting community to be part of this.

54:29

And this is a very simple one, which nonetheless takes a lot of work: data literacy, and democratizing the data such that community understands what the data mean and don't mean, is very important these days.

54:47

The next kind of literacy divide, in my opinion.

54:53

Thank you, Dr. Payton, I really appreciate that. And thank you again for joining us today. Dr. Jones, we've had several questions about what type of data to use.

55:07

And we also have a question about the quality of data: overcoming the lack of appropriate self-reported data, and assessing and correcting for bias in data in a way that scales to larger datasets. Could you address that?

55:26

Yeah, it sounds like the data we want to use is the kind of data that insurance companies, for instance, have.

55:33

When I heard ... talking about the kind of data they have access to, I think there are a lot of researchers who are very jealous and would very much like to have that kind of data.

55:45

Because you don't want to have just a few data points. To judge someone's medical fate on the basis of their skin color is clearly wrong. As Dr. Payton was saying, to reduce someone to their zip code is clearly wrong.

55:58

What you need is multifaceted data on many, many factors of a person's background. Possibly their ancestry. Not just where they live now, but where they have lived previously, their education. There's lots of stuff you need to know.

56:12

A lot of medical researchers will say it's too hard to collect all that.

56:16

But there are people who are managing to do that. There's a tremendous amount of information that's in health datasets, whether held by hospitals or insurers, and we need to get better at using it. And it sounds like Anthem, at least, is taking good steps towards doing so.

56:31

The other thing we have to be careful of is not to generalize.

56:34

Just because people who live in one area are often a certain way doesn't mean that everyone who lives in that zip code is that way. Just because there are some traits that are more common in people of color than in people who are white doesn't mean we should assume that all people of color have those traits.

56:50

So, we need to collect very good data, very extensive data, and we need to be very careful about how we apply it to specific individuals.

57:00

Thank you. And thank you, Dr. Jones, for your overall presentation and perspective. We have time for one last question.

57:12

And, Mr. Ronanki, we have a question about how a company can develop a culture that addresses bias in data and learns to collect data in an unbiased way. Could you address that question?

57:30

Yeah, absolutely. And to maybe answer a question that, perhaps, Dr. David Jones was asking: we do have something that we call a data sandbox.

57:42

That is, a subset of our data is available to researchers.

57:45

So, if anyone's interested in researching on our datasets, please do reach out, and I will be happy to onboard them onto that.

57:55

As far as the culture question, Sheree, it's about, I think, starting with the assumption that there is bias in everything we do, and it's always existed.

58:07

The issue that we have with the present state of technologies is that we can scale it very quickly, and that bias, which

58:15

was limited by human scale before, when it translates to machine scale, could reach hundreds of millions of people in an instant.

58:24

And that's the danger. So I think what we do at Anthem, for every single thing, whether it's automating a business process or providing a recommendation to a clinician on a particular care-related topic,

58:39

is assume that there is an inherent bias embedded within whatever we've developed.

58:45

So what we then do is, with that assumption, we test for it and ask how we improve that. And part of it is through understanding the quality of the data

58:55

and filling in gaps with synthetic and other data sources where needed.

59:00

And then being willing to pull the plug on initiatives that don't pass muster, regardless of the financial cost at the end of the day. It's not an easy thing; that's a hard thing to do.

59:16

Because when you kick off a project and you've invested hundreds of thousands or millions of dollars into it, and then you discover that perhaps the quality assumptions aren't great, or the quality of the data isn't at a level where we can use it at scale,

59:33

then the responsible thing to do is not roll it out, and wait until we remediate the issues and address them.

59:39

And that oftentimes is the hardest thing to do at a big enterprise, which is to stop an initiative and kill it, or adjust it and pivot. Or something is already in production, you discover an issue, and you've got to take it offline.

59:52

And clearly you can imagine the coordination and the logistics that are needed in order to make something like that happen.

1:00:00

Not to mention the regulatory, legal, and other issues you have to deal with as well.

1:00:06

So I think education, educating all the right stakeholders, being transparent and open, enlisting the help of the ecosystem.

1:00:15

So not all the answers are within a company, but I think the broader community can help a lot in addressing those issues.

1:00:21

So enlisting a broader ecosystem can often help.

1:00:27

But it starts, I think, with the mindset that the whole space is a work in progress, and there are a lot of lessons to be learned in how to make this happen.

1:00:38

And be willing to accept that a sign of failure

1:00:42

in an algorithm, which is perhaps interpreted as the presence of bias, means that you now have something tangible to act on.

1:00:50

So it should be viewed as learning, as opposed to failure, and incorporated into future iterations and future generations of that algorithm.

1:01:00

So, there's a lot more work to be done.

1:01:02

That's one where I'd say everyone should be looking at a community, ecosystem-based approach outside the boundaries of any one enterprise, because this is far too important an issue to be addressed by just one company.

1:01:16

Thank you very much. Unfortunately, we are out of time for Q and A. First, I want to thank our wonderful speakers for taking time out of their schedules to be with us today. And I want to ask our audience to please take a moment to share feedback on the event when our survey pops up, and also visit our website to look at our extensive public health resources. Later this week, you will all be getting an infographic on the topic of today's webinar to share or republish. In a few days, you will get a copy of the webinar, and please look for our upcoming webinar on the impact of long COVID on health. Thanks again for sharing your afternoon with us.

Speaker Presentations

David S. Jones, MD, PhD

Ackerman Professor of the Culture of Medicine, Harvard University

Fay Cobb Payton, PhD

Professor of Information Technology/Analytics at North Carolina State University

Rajeev Ronanki

SVP & Chief Digital Officer, Anthem, Inc.

This webinar is the fifth part of the series "Stopping the Other Pandemic: Systemic Racism and Health."
